How Do I Create an Attribute MSA Report in Excel Using SigmaXL?

Attribute MSA is also known as Attribute Agreement Analysis. Use the Nominal option if the assessed result is numeric or text nominal (e.g., Defect Type 1, Defect Type 2, Defect Type 3). The assessed result must have at least 3 response levels. With only 2 levels the result is binary, so use the Binary option instead.
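The level-count rule can be written as a small check. This is a minimal sketch: `suggest_msa_option` is a hypothetical helper, not part of SigmaXL. It only counts distinct values, so it cannot tell whether numeric codes are truly nominal. That judgment is still yours.

```python
def suggest_msa_option(assessed_results):
    """Suggest an Attribute MSA option from the number of distinct response levels.

    Hypothetical helper: 3 or more levels -> Nominal, exactly 2 levels -> Binary.
    It is not a SigmaXL function.
    """
    levels = set(assessed_results)
    if len(levels) < 2:
        raise ValueError("need at least two distinct response levels")
    return "Nominal" if len(levels) >= 3 else "Binary"


print(suggest_msa_option(["Type_1", "Type_2", "Type_3", "Type_1"]))  # Nominal
print(suggest_msa_option(["Pass", "Fail", "Pass"]))  # Binary
```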

- Open the file Attribute MSA – Nominal.xlsx. This is a Nominal MSA example with 30 samples, 3 appraisers and 2 trials. The response is text “Type_1”, “Type_2” and “Type_3.” The Expert Reference column is the reference standard from an expert appraisal. Note that the worksheet data must be in stacked column format and the reference values must be consistent for each sample.

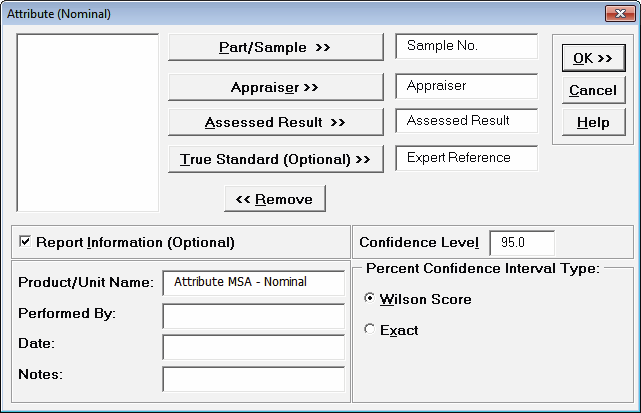

- Click SigmaXL > Measurement Systems Analysis > Attribute MSA (Nominal). Ensure that the entire data table is selected. Click Next.

- In the dialog, select Sample No., Appraiser, Assessed Result and Expert Reference. Check Report Information and enter Attribute MSA – Nominal for Product/Unit Name. Set Percent Confidence Interval Type to Wilson Score.

- Click OK. The Attribute MSA Nominal Analysis Report is produced.

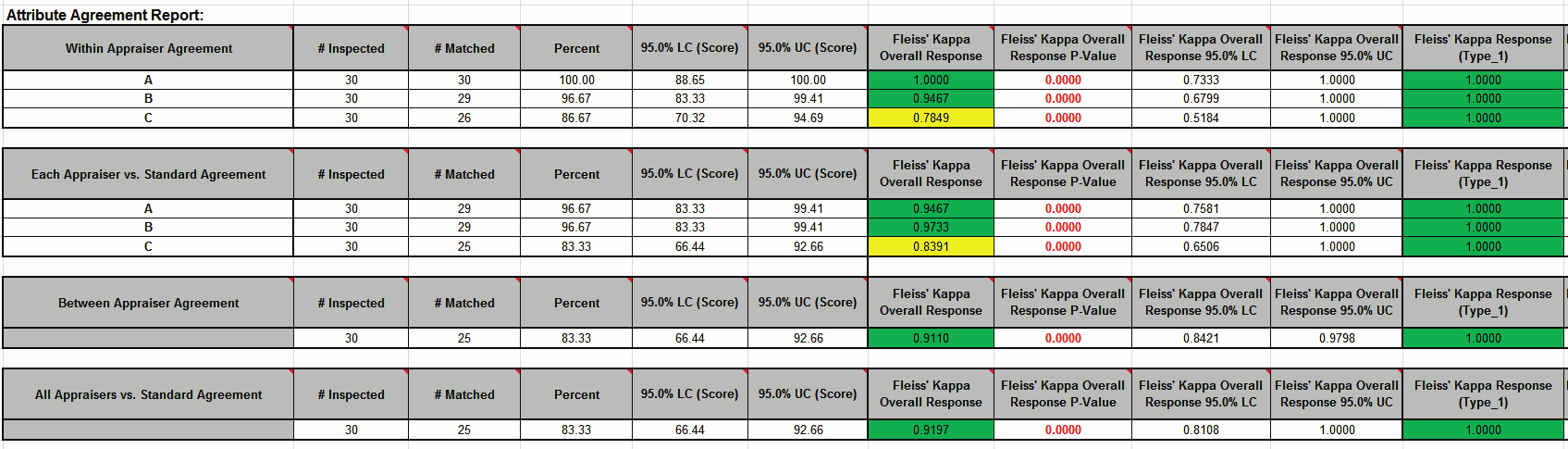
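The Wilson Score option chosen for Percent Confidence Interval Type refers to the Wilson score interval for a binomial proportion. As a sketch, assume SigmaXL applies the textbook Wilson formula to each percent agreement count, for example the number of samples on which an appraiser matched the Expert Reference out of 30. Under that assumption, the limits can be computed as follows (`wilson_score_interval` is a hypothetical name):

```python
import math
from statistics import NormalDist


def wilson_score_interval(matched, total, confidence=0.95):
    """Wilson score confidence interval for the proportion matched/total."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= matched <= total:
        raise ValueError("matched must lie between 0 and total")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p_hat = matched / total
    denom = 1 + z * z / total
    center = (p_hat + z * z / (2 * total)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / total + z * z / (4 * total * total)
    )
    # Clamp to guard against tiny floating-point overshoot at 0% or 100%.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical count: an appraiser matches the expert on 27 of 30 samples.
low, high = wilson_score_interval(27, 30)
print(f"90.0% agreement, 95% CI: {low:.1%} to {high:.1%}")
```

The simple normal (Wald) interval can produce limits outside 0% to 100% and collapses to zero width at 100% agreement. The Wilson interval always stays inside 0% to 100% and still has a useful width at 100% agreement, which is a common result for a good measurement system.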

Tip: While this report is quite extensive, a quick assessment of the attribute measurement system can be made by viewing the Kappa color highlights: Green - very good agreement (Kappa >= 0.9); Yellow - marginally acceptable, improvement should be considered (Kappa 0.7 to < 0.9); Red - unacceptable (Kappa < 0.7). See Attribute MSA (Binary) for a detailed discussion of the report graphs and tables. Here we will just look at Kappa.
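The color highlights follow a simple threshold rule. This minimal sketch reproduces only the published thresholds, not SigmaXL's cell formatting. `kappa_color` is a hypothetical name.

```python
def kappa_color(kappa):
    """Map a Kappa value to the rule-of-thumb color bands."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("Kappa must lie between -1 and +1")
    if kappa >= 0.9:
        return "Green"   # very good agreement
    if kappa >= 0.7:
        return "Yellow"  # marginally acceptable, improvement should be considered
    return "Red"         # unacceptable


for k in (0.95, 0.82, 0.55):
    print(k, kappa_color(k))  # Green, Yellow, Red respectively
```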

Attribute Agreement Report:

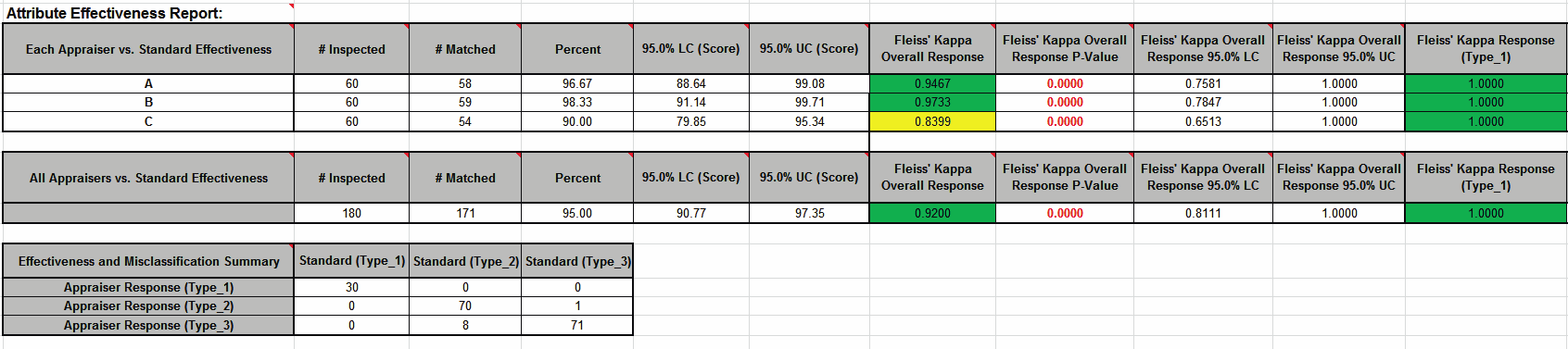

Attribute Effectiveness Report:

Fleiss’ Kappa statistic is a measure of agreement that is analogous to a “correlation coefficient” for discrete data. Kappa ranges from -1 to +1: A Kappa value of +1 indicates perfect agreement. If Kappa = 0, then agreement is the same as would be expected by chance. If Kappa = -1, then there is perfect disagreement. “Rule-of-thumb” interpretation guidelines: >= 0.9 very good agreement (green); 0.7 to < 0.9 marginally acceptable, improvement should be considered (yellow); < 0.7 unacceptable (red). See Appendix Kappa for further details on the Kappa calculations and “rule-of-thumb” interpretation guidelines.
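The following sketch makes the definition concrete with the standard textbook Fleiss’ Kappa formula, κ = (P̄ − P̄e) / (1 − P̄e). Here P̄ is the observed share of agreeing rating pairs and P̄e is the agreement expected by chance. SigmaXL documents its own calculation in the Kappa appendix, which may differ in detail. The input layout is an assumption: a count table giving, for each sample, how many of its m ratings fell in each response level. For a single appraiser's repeatability, m would be the number of trials.

```python
def fleiss_kappa(counts):
    """Overall Fleiss' Kappa.

    counts[i][j] is the number of ratings that put sample i in response level j.
    Every sample must receive the same number of ratings m (m >= 2).
    """
    n_samples = len(counts)
    if n_samples == 0:
        raise ValueError("no samples")
    m = sum(counts[0])
    if m < 2 or any(sum(row) != m for row in counts):
        raise ValueError("each sample needs the same number (>= 2) of ratings")
    n_levels = len(counts[0])

    # Observed agreement: share of agreeing rating pairs, averaged over samples.
    p_obs = sum(
        (sum(c * c for c in row) - m) / (m * (m - 1)) for row in counts
    ) / n_samples

    # Chance agreement from the overall proportion of each response level.
    p_level = [sum(row[j] for row in counts) / (n_samples * m) for j in range(n_levels)]
    p_chance = sum(p * p for p in p_level)
    if p_chance == 1.0:
        raise ValueError("Kappa is undefined when only one level is ever used")

    return (p_obs - p_chance) / (1 - p_chance)


# Hypothetical data: 4 samples, 2 trials each, levels Type_1/Type_2/Type_3.
counts = [[2, 0, 0], [0, 2, 0], [0, 1, 1], [0, 0, 2]]
print(round(fleiss_kappa(counts), 3))  # 0.619
```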

Fleiss’ Kappa P-Value tests the null hypothesis H0: Kappa = 0. If P-Value < alpha (0.05 for the specified 95% confidence level), reject H0 and conclude that agreement is not the same as would be expected by chance. Significant P-Values are highlighted in red.
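A sketch of the test: divide Kappa by its standard error under H0 and compare the resulting z with the standard normal distribution. This uses the large-sample null standard error of Fleiss, Nee and Landis (1979). That formula is an assumption here, and SigmaXL may compute the standard error differently. The test is two-sided to match the wording "not the same as would be expected by chance". Some packages instead report a one-sided test against Kappa > 0. The input is the same hypothetical count table as before.

```python
import math
from statistics import NormalDist


def fleiss_kappa_test(counts):
    """Return (kappa, z, two-sided P-Value) for H0: Kappa = 0.

    counts[i][j] = number of ratings placing sample i in response level j;
    every sample must have the same number of ratings m (m >= 2).
    """
    n = len(counts)
    if n == 0:
        raise ValueError("no samples")
    m = sum(counts[0])
    if m < 2 or any(sum(row) != m for row in counts):
        raise ValueError("each sample needs the same number (>= 2) of ratings")

    p_obs = sum((sum(c * c for c in row) - m) / (m * (m - 1)) for row in counts) / n
    p = [sum(row[j] for row in counts) / (n * m) for j in range(len(counts[0]))]
    pq = [pj * (1 - pj) for pj in p]
    sum_pq = sum(pq)  # equals 1 - chance agreement, since the p_j sum to 1
    if sum_pq == 0:
        raise ValueError("Kappa is undefined when only one level is ever used")
    kappa = (p_obs - (1 - sum_pq)) / sum_pq

    # Large-sample standard error of Kappa when H0 is true
    # (Fleiss, Nee and Landis, 1979); note q_j - p_j = 1 - 2 p_j.
    se0 = math.sqrt(2 / (n * m * (m - 1))) / sum_pq * math.sqrt(
        sum_pq ** 2 - sum(pqj * (1 - 2 * pj) for pqj, pj in zip(pq, p))
    )
    z = kappa / se0
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return kappa, z, p_value


# Hypothetical data: 4 samples, 2 trials each, three response levels.
kappa, z, p_value = fleiss_kappa_test([[2, 0, 0], [0, 2, 0], [0, 1, 1], [0, 0, 2]])
print(f"Kappa = {kappa:.3f}, z = {z:.2f}, P-Value = {p_value:.4f}")
```

On this tiny hypothetical table, the P-Value comes out above 0.05 even though Kappa is about 0.62. This shows how a small number of samples weakens the test.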

Fleiss’ Kappa LC (Lower Confidence) and Fleiss’ Kappa UC (Upper Confidence) limits are computed with a normal approximation for Kappa. Interpretation guidelines: if the Kappa lower confidence limit is >= 0.9, agreement is very good; if the Kappa upper confidence limit is < 0.7, the attribute agreement is unacceptable. Wide confidence intervals indicate that the sample size is inadequate.
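Given a Kappa estimate and its standard error, the normal-approximation limits are Kappa ∓ z × SE. This sketch takes the standard error as an input rather than assuming how SigmaXL derives it. It then applies the two interval guidelines. The function names are hypothetical, and clamping the limits to the -1 to +1 range of Kappa is our choice.

```python
from statistics import NormalDist


def kappa_limits(kappa, se, confidence=0.95):
    """Normal-approximation limits: kappa -/+ z * se (se supplied by the caller)."""
    if se < 0:
        raise ValueError("standard error must be non-negative")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Clamping to [-1, 1] is our choice, matching the stated range of Kappa.
    return max(-1.0, kappa - z * se), min(1.0, kappa + z * se)


def interpret_limits(lc, uc):
    """Apply the two interval guidelines; None means neither guideline applies."""
    if lc >= 0.9:
        return "very good agreement"
    if uc < 0.7:
        return "unacceptable"
    return None


# Hypothetical estimates as (kappa, standard error) pairs.
for kappa, se in [(0.96, 0.02), (0.55, 0.05), (0.80, 0.10)]:
    lc, uc = kappa_limits(kappa, se)
    print(f"Kappa {kappa:.2f}: LC {lc:.3f}, UC {uc:.3f} -> {interpret_limits(lc, uc)}")
```

In the third hypothetical case, the interval spans both thresholds, so neither guideline applies. Per the text, such a wide interval points to an inadequate sample size.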

Fleiss’ Kappa Overall is an overall Kappa for all of the response levels.

Fleiss’ Kappa Individual gives Kappa for each response level (Type_1, Type_2 and Type_3). This helps identify whether an appraiser has difficulty assessing a particular defect type.

This is a very good attribute measurement system, but Appraiser C is marginal, so refresher training would be helpful.
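Fleiss’ Kappa Individual can be sketched the same way. For each response level j, compare the observed disagreement involving that level with what chance would produce: κ_j = 1 − Σᵢ n_ij(m − n_ij) / (N·m·(m − 1)·p_j·(1 − p_j)). This is a minimal sketch of the standard per-category formula, using the same hypothetical count-table input as above. It is not SigmaXL's code.

```python
import math


def fleiss_kappa_by_level(counts):
    """Per-level Fleiss' Kappa, one value per response level (column of counts).

    counts[i][j] = ratings placing sample i in level j; each sample has m ratings.
    Returns NaN for a level that is never (or always) chosen, where Kappa is undefined.
    """
    n = len(counts)
    if n == 0:
        raise ValueError("no samples")
    m = sum(counts[0])
    if m < 2 or any(sum(row) != m for row in counts):
        raise ValueError("each sample needs the same number (>= 2) of ratings")
    results = []
    for j in range(len(counts[0])):
        p = sum(row[j] for row in counts) / (n * m)
        if p in (0.0, 1.0):
            results.append(math.nan)
            continue
        # Rating pairs that split between level j and another level, summed over samples.
        disagreement = sum(row[j] * (m - row[j]) for row in counts)
        results.append(1 - disagreement / (n * m * (m - 1) * p * (1 - p)))
    return results


levels = ["Type_1", "Type_2", "Type_3"]
counts = [[2, 0, 0], [0, 2, 0], [0, 1, 1], [0, 0, 2]]
for name, k in zip(levels, fleiss_kappa_by_level(counts)):
    print(f"{name}: {k:.3f}")
```

Here Type_1 scores 1.000, while Type_2 and Type_3 each score about 0.467. This points to confusion between those two levels on the third sample. The overall Kappa equals the average of these per-level values weighted by p_j(1 − p_j).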

